Big cloud providers today report that their power "overhead" (i.e., the non-computing energy spent on cooling and power conversion) has dropped to around 10%. The next step towards further efficiency will therefore be to dynamically shape power consumption according to the actual load.


Unfortunately, this is not straightforward to achieve in practice. Consolidation can be used to keep only the minimal number of servers running that is needed to accommodate the sold capacity. However, it is worth pointing out that this number should be based on the actual computation intensity (i.e., CPU usage) rather than on the resource size (mainly, number of CPUs and amount of RAM), as one might be tempted to do. It is indisputable that every interactive service (thus excluding intensive computation on large bulks of data) is subject to large variations in incoming requests, with typical hourly, daily, weekly, and even seasonal periodicity. The much-lauded cloud elasticity is only effective over the longer timescales, since the time needed to provision and de-provision resources, technically and above all administratively, is on the order of days.
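To make the difference between sizing by resource size and sizing by actual CPU usage concrete, the following minimal sketch estimates how many servers must stay powered on under each criterion. The server capacity, the VM fleet, and the function names are hypothetical and chosen only for illustration; they are not figures from any provider.

```python
import math
from dataclasses import dataclass

# Hypothetical physical server capacity, in cores.
SERVER_CORES = 32


@dataclass
class VM:
    name: str
    vcpus: int        # nominal size sold to the customer
    cpu_usage: float  # measured utilisation as a fraction of vcpus (0.0-1.0)


def servers_by_size(vms):
    """Lower bound on running servers if every VM reserves all of its vCPUs."""
    demand = sum(vm.vcpus for vm in vms)
    return math.ceil(demand / SERVER_CORES)


def servers_by_usage(vms):
    """Lower bound on running servers if VMs count only for the CPU they use."""
    demand = sum(vm.vcpus * vm.cpu_usage for vm in vms)
    return math.ceil(demand / SERVER_CORES)


# 16 hypothetical web front-ends, 8 vCPUs each, averaging 15% CPU load.
fleet = [VM(f"web-{i}", vcpus=8, cpu_usage=0.15) for i in range(16)]
print(servers_by_size(fleet), servers_by_usage(fleet))
```

Both functions return aggregate lower bounds only: a real placement must also respect per-server limits and memory, and must keep headroom for the load fluctuations described above.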

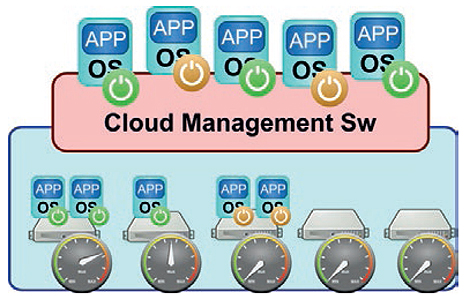

LET'S USE POWER STATES FOR VIRTUAL MACHINES!

Until emerging technologies like unikernels demonstrate their technical effectiveness and usability more concretely, real-time resource provisioning remains a chimera. In fact, deploying additional (idle) resources is the only viable solution for critical business services that require high availability and QoS guarantees in the face of both failures and workload peaks. This means that cloud users are effectively forced to pay for something that might only be used occasionally, while the effectiveness of any energy-efficiency consolidation is partially undermined.

"Let's not put high-performance servers into a permanent idle state!" was the title of a recent article in this same magazine¹, which debated the efficiency of low-power idle states for servers. We would like to continue the discussion with an additional, challenging call: Let's use power states for virtual machines!

But what does a power state (e.g., active, suspend-to-RAM, suspend-to-disk) mean for a virtual resource? It cannot save energy directly, as happens with real hardware, but it is an effective trigger for managing the underlying physical infrastructure. Basically, it signals that the resource will not be used until it is resumed.

THE ARCADIA USE CASE FOR ENERGY EFFICIENCY

The ARCADIA project has developed an innovative framework for the development, deployment, and management of highly distributed cloud applications. Through policy-driven orchestration, the framework supports life-cycle operations such as re-configuration, horizontal and vertical scaling, and replication. A specific Use Case has been implemented for energy efficiency, which pursues greater efficiency by changing the power state of virtual machines according to the evolving context. An energy-efficiency module extends OpenStack by gathering active VMs into the smallest number of servers and putting all other servers into suspend-to-RAM mode. The project will shortly carry out a functional and performance evaluation with a video transcoding application.
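The consolidation step can be sketched as follows. This is a minimal illustration under stated assumptions, not the ARCADIA module or any OpenStack API. It assumes a hypothetical per-host CPU capacity and per-VM CPU demand. It uses first-fit decreasing, a standard bin-packing heuristic, as a stand-in for whatever placement logic the project actually applies. It also simplifies by assuming that suspended VMs do not need a running host, which is the sense in which a VM power state acts as a trigger.

```python
from dataclasses import dataclass
from enum import Enum


class PowerState(Enum):
    ACTIVE = "active"
    SUSPEND_TO_RAM = "suspend-to-ram"
    SUSPEND_TO_DISK = "suspend-to-disk"


@dataclass
class VM:
    name: str
    cpu_demand: float  # cores actually used (hypothetical metric)
    state: PowerState


def consolidate(vms, hosts, host_capacity):
    """Pack active VMs onto as few hosts as possible (first-fit decreasing).

    Returns (placement, host_states): placement maps VM name -> host, and
    host_states maps host -> PowerState. Suspended VMs are not placed, under
    the simplifying assumption that they need no running host until resumed.
    """
    active = [vm for vm in vms if vm.state is PowerState.ACTIVE]
    active.sort(key=lambda vm: vm.cpu_demand, reverse=True)

    load = {h: 0.0 for h in hosts}
    placement = {}
    for vm in active:
        for h in hosts:  # first host with enough spare capacity
            if load[h] + vm.cpu_demand <= host_capacity:
                load[h] += vm.cpu_demand
                placement[vm.name] = h
                break
        else:
            raise RuntimeError(f"not enough capacity for {vm.name}")

    # Hosts left without active VMs can be put into suspend-to-RAM.
    used = set(placement.values())
    host_states = {
        h: PowerState.ACTIVE if h in used else PowerState.SUSPEND_TO_RAM
        for h in hosts
    }
    return placement, host_states


# Hypothetical example: two running transcoders and two suspended standbys.
vms = [
    VM("transcoder-1", 6.0, PowerState.ACTIVE),
    VM("transcoder-2", 3.0, PowerState.ACTIVE),
    VM("standby-1", 6.0, PowerState.SUSPEND_TO_RAM),
    VM("standby-2", 6.0, PowerState.SUSPEND_TO_DISK),
]
placement, host_states = consolidate(vms, ["h1", "h2", "h3"], host_capacity=8.0)
print(placement)
print({h: s.value for h, s in host_states.items()})
```

In this sketch, the standby VMs reserved for failures or peaks do not keep any server awake while they are suspended. Resuming them would simply trigger a new consolidation pass that wakes up a host.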

Contact Details:

Matteo Repetto, CNIT

Email: matteo.repetto@cnit.it

Project web: www.arcadia-framework.eu

Project twitter: @eu_arcadia

1. Cecilia Bonefeld-Dahl, “Let’s not put high-performance servers into a permanent idle state!,” European Energy Innovation, Summer 2017, pp. 32-33.

This project is funded by the EU's H2020 Programme under GA no. 645372